- DDOS Attacks and Website Hacking - 6th July 2020

Very recently, this website suffered a Distributed Denial of Service (DDOS) attack. Following on from that, I thought I would write a short blog post on what happened, how I found out about it, the response to recover from the attack, and the lessons learnt.

Firstly, this article will be heavily focused on AWS as this website runs on AWS. However, the general principles of investigations, logging and monitoring etc. remain applicable to any type of website and web infrastructure.

I received notification of the DDOS through an alert from Uptime Robot. The website was unresponsive to all HTTPS requests. Furthermore, I was unable to SSH into the server – it was unresponsive to SSH requests too. This unfortunately meant it was not possible to see which process on the server was hogging all the resources.

By this time I had also received email alerts from AWS CloudWatch warning of CPU over-utilisation. At this point the most likely culprit was a typical DDOS attack: it is very unlikely that a previously well-behaved server, fully updated with the latest builds and security patches, would suddenly lack the memory or CPU capacity to even respond to SSH requests.

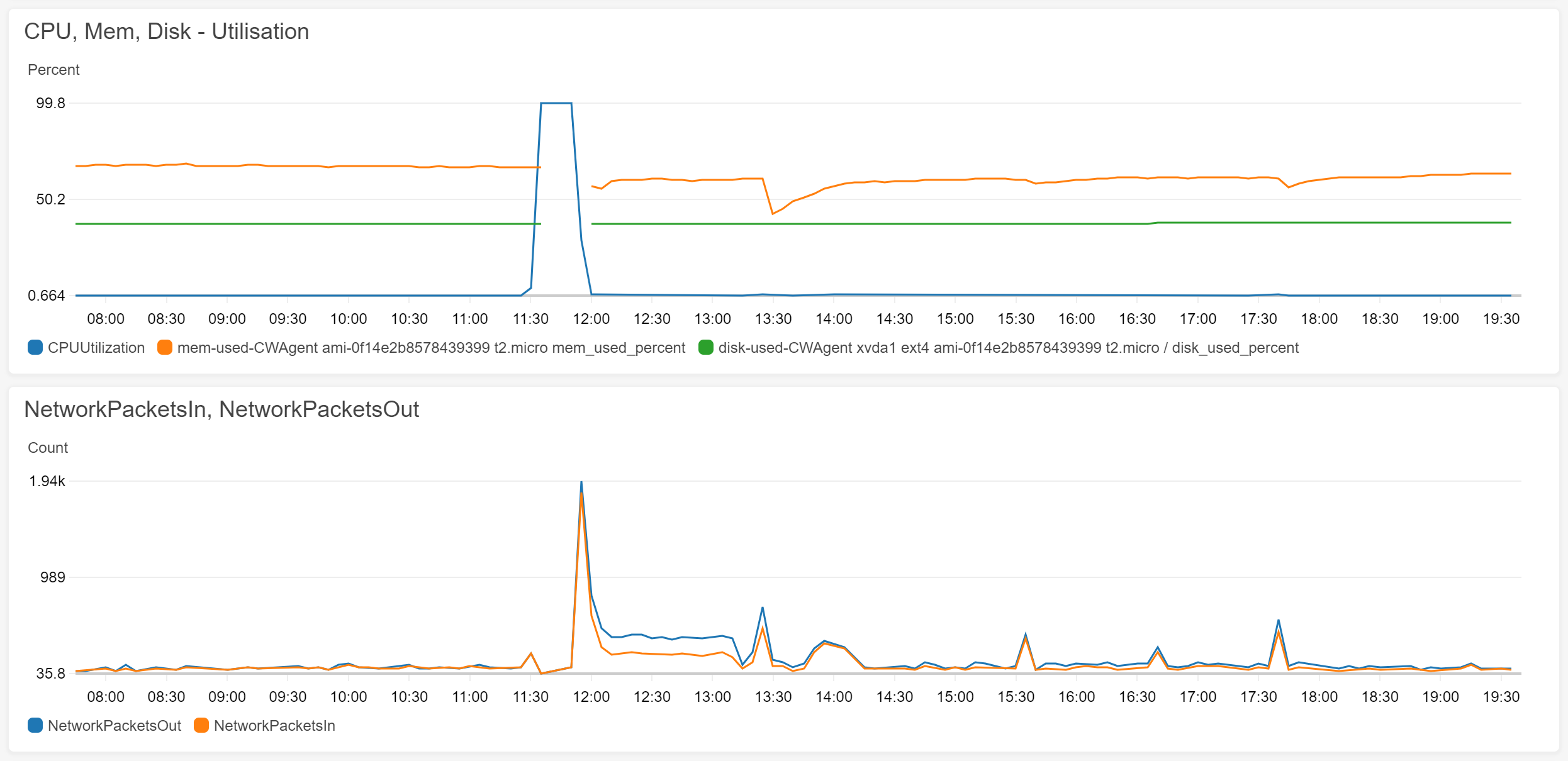

Looking at the monitoring metrics below, it was clear that an extraordinary spike in CPU utilisation was underway, and that it coincided with a spike in network traffic.

This is in line with DDOS attacks, where incoming external network traffic overloads the server. However, there was also a smaller chance that the server had been compromised in some way and was initiating the traffic itself, which in turn resulted in the processing load spiking.

This can easily be determined by looking at the logs collected by AWS CloudWatch. In fact, these were the only logs available at this point: with the overloaded server refusing SSH logins, it was not possible to log on to the server directly and read the web server logs there.

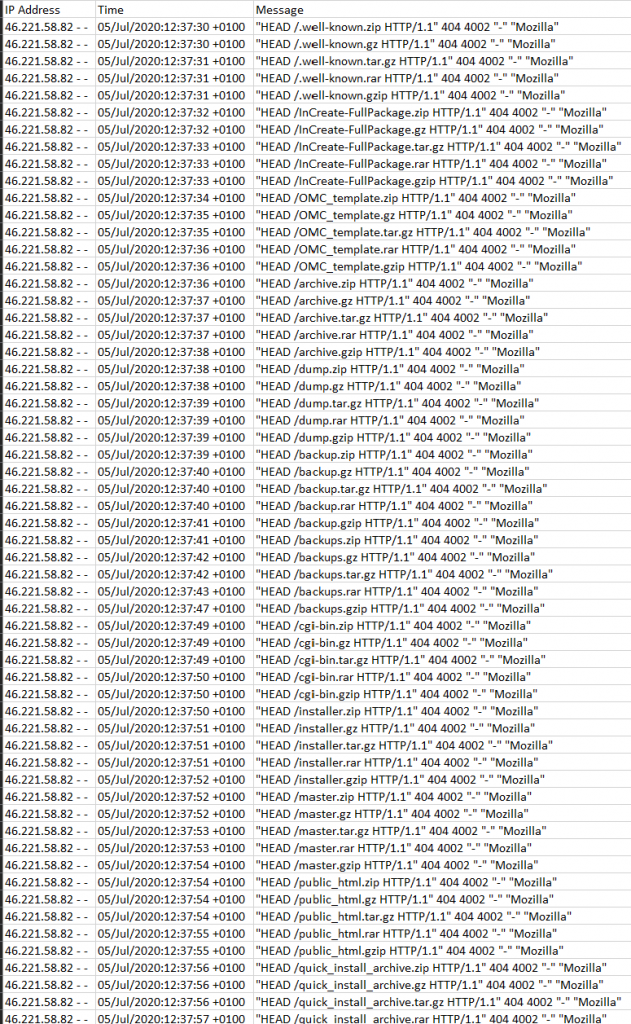

Looking at the web server access log in CloudWatch, below, it is clear that there is extraordinary activity going on.

DDOS attack log

As you can see from the picture above, someone is sending HTTP HEAD requests at a rate of two every second to obtain metadata. This means that the server is consistently being asked, by one IP address, to provide metadata about files on the server twice every second, i.e. someone is asking the web server for data about the data (not the data itself) using the HTTP HEAD method.
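To make that kind of log reading repeatable, here is a minimal Python sketch that counts requests of one HTTP method per client IP per second. It assumes the access log is in the usual Apache "combined" format; the sample lines, IP addresses, paths and timestamps are made up for illustration and are not taken from the real log.

```python
import re
from collections import Counter

# Matches the start of an Apache "combined" log line:
# client IP, timestamp (to the second), HTTP method and path.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\] ]+)[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+)'
)


def requests_per_second(lines, method="HEAD"):
    """Count requests of one HTTP method per (client IP, second)."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and match.group("method") == method:
            counts[(match.group("ip"), match.group("ts"))] += 1
    return counts


# Made-up sample lines; the addresses come from documentation-only ranges.
sample = [
    '203.0.113.7 - - [01/Jan/2020:10:15:01 +0000] "HEAD /wp-content/a.css HTTP/1.1" 403 0 "-" "-"',
    '203.0.113.7 - - [01/Jan/2020:10:15:01 +0000] "HEAD /wp-content/b.css HTTP/1.1" 403 0 "-" "-"',
    '198.51.100.4 - - [01/Jan/2020:10:15:02 +0000] "GET / HTTP/1.1" 200 5120 "-" "-"',
]

for (ip, second), hits in requests_per_second(sample).items():
    print(ip, second, hits)
```

Any client that shows up with several HEAD requests in the same second, second after second, is worth a closer look.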

An HTTP flood/cache-busting (Layer 7) Attack

For a small server like this one, this is enough to flood the CPU with enough tasks over a small period of time to max out the capacity and cause the system to hang. Specifically, the attack underway was an OSI Model Layer 7 (application layer) HTTP flood/cache-busting attack.

This is an application layer attack that is designed to swamp the server with requests. The requests don’t have to be meaningful; in this case they were just repetitive requests asking for information about certain files. They never asked for the files themselves, mainly because access would have been denied, and a denied request triggers little further CPU processing, which defeats the purpose of a request whose real intent is to overwhelm the server. I also suspect that, had the files been accessible, the returned data would in turn have flooded the attacking servers.

The attacker’s actual motive is to keep the server CPU busy working on requests. Once the requests reach a certain volume, in this case two every second, they flood the CPU with so much activity that it is unable to deal with them and crashes.

Network Traffic and CPU Load

One thing this attack clearly shows is that the attacker does not need to generate a lot of incoming traffic to overload CPU resources, i.e. CPU load is linked not to the size of the network traffic, but to the number of requests.

All the attacker needs is to generate enough requests to overload the server’s resources. The size of the data that forms the incoming request isn’t important. Thus, the attacker merely needs to generate many small requests that flood the CPU.

This saves resources on the attacker’s side, as they don’t need to expend their own system’s resources generating large requests, and it also conserves the attacker’s outgoing network bandwidth.

In a DDOS attack, it’s the quantity, not specifically the size, of incoming requests that matters. This is one of the reasons why identifying HTTP flood style DDOS attacks is difficult: filtering incoming requests by looking for a high number of data packets associated with them wouldn’t work, as those requests don’t have a high number of packets.
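A small Python sketch can make the count-versus-size point concrete. Everything in it is hypothetical: the addresses, the request sizes and both thresholds are invented for illustration, with the flood modelled on the "two small requests per second for half a minute" pattern described in this post.

```python
from collections import defaultdict


def summarise(requests):
    """Return {ip: (request_count, total_bytes)} for (ip, size_in_bytes) pairs."""
    totals = defaultdict(lambda: [0, 0])
    for ip, size in requests:
        totals[ip][0] += 1
        totals[ip][1] += size
    return {ip: (count, size) for ip, (count, size) in totals.items()}


def flag_by_bytes(summary, max_bytes):
    """Clients whose total traffic volume exceeds max_bytes."""
    return {ip for ip, (_, size) in summary.items() if size > max_bytes}


def flag_by_count(summary, max_requests):
    """Clients that sent more than max_requests requests."""
    return {ip for ip, (count, _) in summary.items() if count > max_requests}


# Hypothetical 30-second window: one client sends 60 tiny requests
# (two per second), another sends a single large upload.
window = [("203.0.113.7", 200)] * 60 + [("198.51.100.4", 500_000)]
summary = summarise(window)

print(flag_by_bytes(summary, max_bytes=100_000))  # flags only the large upload
print(flag_by_count(summary, max_requests=30))    # flags only the flood
```

A filter based on traffic volume picks out the harmless upload and misses the flood, whereas a filter based on request count does the opposite.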

The Fix

The fix is relatively simple. All that was needed was to blacklist the IP address of the attacker on the firewall and clear the backlog of work queued up on the server, which can be done by restarting the server.

That’s actually what I did: I added that IP address to the AWS firewall ACL to deny traffic from it, and I forced a restart of the server via the management console.
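The block itself was done by hand in the console, but the same kind of rule can be scripted. The sketch below assumes the "firewall ACL" is a VPC network ACL and uses boto3's `create_network_acl_entry` call; the ACL ID, the rule number and the IP address are placeholders, not the real values.

```python
def deny_rule_params(network_acl_id, attacker_ip, rule_number=90):
    """Build the arguments for an inbound DENY entry covering one IPv4 address."""
    return {
        "NetworkAclId": network_acl_id,
        # Rules are evaluated lowest number first, so this must be lower
        # than the number of the rule that allows web traffic.
        "RuleNumber": rule_number,
        "Protocol": "-1",        # -1 means all protocols
        "RuleAction": "deny",
        "Egress": False,         # False = inbound rule
        "CidrBlock": f"{attacker_ip}/32",
    }


def block_ip(network_acl_id, attacker_ip):
    """Apply the rule. Needs boto3 and AWS credentials, so it is not called here."""
    import boto3  # imported lazily so the parameter builder works without boto3

    ec2 = boto3.client("ec2")
    ec2.create_network_acl_entry(**deny_rule_params(network_acl_id, attacker_ip))


# Placeholder values for illustration only.
print(deny_rule_params("acl-EXAMPLE", "203.0.113.7"))
```

Security groups only support allow rules, which is why a deny like this has to sit at the network ACL level (or in a WAF or on the host firewall).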

The next attack attempt (alas, another DDOS attempt is an inevitable part of life managing a web server) might bypass the default AWS DDOS prevention system like this one did, and could come from an unknown IP address. Configuring the web server to stop the same type of attack succeeding again was therefore also required.

For this, once the server was up and running, I SSH’d into it and configured the web server to refuse to serve more than one HTTP HEAD request every two seconds for certain directories (a selection of those listed in the logs).
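To show what such a rule does, here is a minimal Python sketch of the logic only. It is not the web server configuration that was actually applied; the class name, the per-client keying and the `/wp-content` directory are assumptions made for the example.

```python
import posixpath


class HeadRateLimiter:
    """Allow at most one HEAD request per client and directory every min_interval seconds."""

    def __init__(self, protected_dirs, min_interval=2.0):
        self.protected_dirs = tuple(protected_dirs)
        self.min_interval = min_interval
        self.last_allowed = {}  # (client_ip, directory) -> time of last allowed request

    def allow(self, client_ip, method, path, now):
        directory = posixpath.dirname(path)
        if method != "HEAD" or not directory.startswith(self.protected_dirs):
            return True  # the rule only covers HEAD requests in protected directories
        key = (client_ip, directory)
        previous = self.last_allowed.get(key)
        if previous is not None and now - previous < self.min_interval:
            return False  # too soon after the last allowed request: refuse
        self.last_allowed[key] = now
        return True


# Hypothetical usage; times are seconds on an arbitrary clock.
limiter = HeadRateLimiter(["/wp-content"])
print(limiter.allow("203.0.113.7", "HEAD", "/wp-content/a.css", now=0.0))
print(limiter.allow("203.0.113.7", "HEAD", "/wp-content/b.css", now=0.5))
print(limiter.allow("203.0.113.7", "HEAD", "/wp-content/a.css", now=2.0))
```

With a two-per-second flood, a rule like this turns roughly three out of every four requests into cheap refusals.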

I also saw regular attempts at exhausting server resources by having it return data in XML format. Thus, I also configured the server to reduce the XML size limit to conserve server resources.

Login Attempts

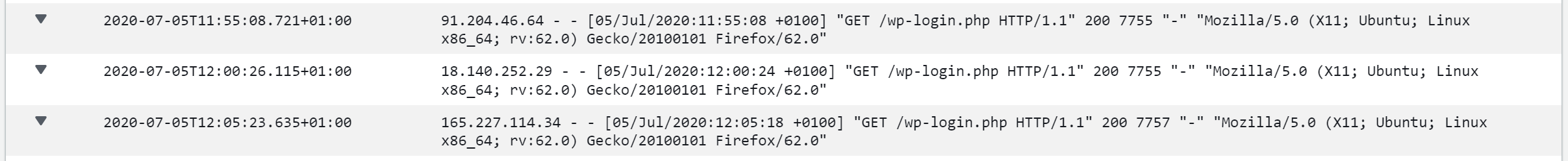

Looking at the logs also showed another standout item: login pages were regularly being requested as well. As shown below, there were, for example, requests from different IP addresses to access the WordPress login page every 5 minutes. There were also other regular requests to access other login pages such as phpMyAdmin.

The regularity of the WordPress login page access attempts (once every 5 minutes) from different IP addresses is quite remarkable. It clearly shows a coordinated attempt to gain unauthorised access to the applications that run on the server. It was as if someone had configured the systems behind those IP addresses to attempt a login every 5 minutes; genuine human users would obviously never have been this synchronised.

This highlights an attempt by the attacker to automate a brute force attack to gain access. The attacker doesn’t really know much about the system. They are just guessing what the password might be and have set up an attempt to guess a password every 5 minutes, because some systems (they don’t know if this system is like that, they are just guessing after all) are configured to have a password timeout of 5 minutes.
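The "too regular to be human" observation can be checked with a few lines of Python. This is a rough sketch: the timestamps are invented, and the five-second tolerance is an arbitrary assumption rather than a recommended value.

```python
from datetime import datetime
from statistics import mean, pstdev


def looks_scheduled(timestamps, tolerance_seconds=5.0):
    """Return (is_regular, average_gap_seconds) for a list of request times."""
    ordered = sorted(timestamps)
    gaps = [
        (later - earlier).total_seconds()
        for earlier, later in zip(ordered, ordered[1:])
    ]
    if len(gaps) < 2:
        return False, None  # too few requests to judge
    # A small spread between gaps means the requests arrive on a timer.
    return pstdev(gaps) <= tolerance_seconds, mean(gaps)


# Made-up request times for a login page, roughly five minutes apart.
hits = [
    datetime(2020, 1, 1, 10, 0, 0),
    datetime(2020, 1, 1, 10, 5, 1),
    datetime(2020, 1, 1, 10, 10, 0),
    datetime(2020, 1, 1, 10, 15, 2),
]
print(looks_scheduled(hits))
```

Because the requests come from different IP addresses, the timestamps should be pooled per login page rather than per client before running a check like this.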

One thing to point out is that, due to the difference in timings of the DDOS attack and the login attempts, as well as the different geographies the IP addresses were located in (I did a search on who owned those IP addresses and where they were located as part of the investigation), I don’t think the DDOS attack and login attempts are linked.

Hacking Is Literally Hacking

The above information shows that hacking is just that: hacking. As the definition of the word says, it is usually an inelegant way of gaining entry or breaking something, i.e. hacking your way into something or hacking (breaking) something into pieces.

This does not imply that it’s something not to respect. On the contrary, it can be very, very effective. It just means that it is not something to be particularly frightened of, nor something instigated by a super genius in the way Hollywood likes to portray. Take this case, for example: the fix was simply forcing a restart of the VM and configuring the Apache server to restrict some of the request timeouts and the sizes of files it would serve back.

Looking further at this DDOS case, all the attacker was really doing was sending HTTP GET (or HEAD) requests, repeatedly and quickly, in an effort to flood the server with requests, fill up its cache and subsequently cause it to fail. It did this by sending 2 requests every second for half a minute, which, for a small server fitting the profile of this type of website, easily caused it to be overloaded.

Obtain Alerts Via an App

For small websites without IT staff always on site, or without a dedicated incident monitoring and response team with its own separate infrastructure, it’s best to always have an independent monitor alerting you, loudly, when your website is down. I use Uptime Robot as it offers, most importantly, alerting, but also monitoring and configuration functionality, via an app available for free. Other alternatives are available; however, they either only provide alerts via email, which is not ideal for something this important, or charge a fee for in-app alerting.
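At its core, an external uptime check is just a request made from somewhere other than the server itself. The Python sketch below shows that idea only; it is not how Uptime Robot works internally, the function names and URL are made up, and the loud alerting (the important part) is left out.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Fetch the URL and return its HTTP status code; raises on network failure."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def is_up(url, fetch=http_status):
    """True if the site answers with a non-error status, False otherwise."""
    try:
        return 200 <= fetch(url) < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts and HTTP error responses.
        return False


# Hypothetical usage, run on a schedule from a machine outside the web server:
# if not is_up("https://www.example.com/"):
#     ...raise an alert through whatever channel wakes you up...
```

The `fetch` parameter exists only so the check can be exercised without touching the network.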

Setup Monitoring and Logging on CloudWatch

As previously mentioned, it’s advisable to set up logging and monitoring via CloudWatch. This has the benefit of logging CPU and memory usage statistics as well as the Apache access and error logs, which will assist in investigations. Additionally, it provides alerting functions via email.
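For reference, a CPU alarm of the kind that sent those emails can be created with boto3's `put_metric_alarm`. The sketch below is an assumption about a reasonable setup, not a copy of the alarm used on this site: the instance ID, the SNS topic ARN, the 80% threshold and the five-minute period are all placeholders.

```python
def cpu_alarm_params(instance_id, sns_topic_arn, threshold_percent=80.0):
    """Build the arguments for a CloudWatch alarm on average EC2 CPU utilisation."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,              # seconds per evaluation window
        "EvaluationPeriods": 1,     # alarm after one window over the threshold
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. an SNS topic with an email subscription
    }


def create_cpu_alarm(instance_id, sns_topic_arn):
    """Create the alarm. Needs boto3 and AWS credentials, so it is not called here."""
    import boto3  # imported lazily so the parameter builder works without boto3

    cloudwatch = boto3.client("cloudwatch")
    cloudwatch.put_metric_alarm(**cpu_alarm_params(instance_id, sns_topic_arn))


# Placeholder values for illustration only.
print(cpu_alarm_params("i-EXAMPLE", "arn:aws:sns:REGION:ACCOUNT:TOPIC"))
```

Note that CPU utilisation is reported by EC2 out of the box, whereas memory statistics and web server logs only reach CloudWatch once the CloudWatch agent is installed and configured on the server.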

No AWS support under basic support plans

AWS does not offer any support on its basic support plan (i.e. the free one). This means that AWS will not offer any features to prevent future attacks, or even readily accept information from you to help it plan for preventing them. Any steps taken, or features adopted, have to be done without its input.

Application Layer Attacks Are Growing in Frequency and Are Not as Easily Detected as Infrastructure Layer Attacks

AWS does attempt to provide DDOS protection as standard and for free through its AWS Shield Standard offering. This is automatically available to all AWS customers and is enabled by default.

However, Layer 7 attacks, such as the HTTP flood/cache-busting attack in this case, are difficult to identify and filter out.

This does not mean that everybody has to purchase an additional subscription to AWS WAF and AWS Shield Advanced. This is because it’s not certain that those systems would fare any better in filtering out the malicious traffic.

Rather, configuring the web server to mitigate problems might be a better solution before purchasing an additional feature that is not guaranteed to work. Apache configurations, such as in my case where I have limited the size of XML requests, can also be done to limit resource consumption triggered by client input.

Log-in Pages and Web Sites are Routinely Probed for Weaknesses and Unauthorised Entry by Malicious Actors

The investigation proved, once again, that websites generally are targeted for attacks by malicious actors (such as hackers) as standard fare. In fact, it’s best to think of it as a certainty that any website, no matter how big or small, will be probed for weaknesses or an entry point by hackers.

The key takeaway, especially in these days of remotely logging into systems, is to have strong passwords, multi-factor authentication – MFA (which this site has), and if possible, a whitelist of external IP addresses that are only allowed certain types of entry, such as admin access.

This final point however might not always be practical for large businesses who may have employees travelling all over the world and logging in from any IP address in the world. Thus relying on MFA and strong passwords would be the preferred, and possibly only currently practical approach.
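For setups where an IP whitelist is practical, the check itself is simple. Here is a minimal Python sketch using the standard `ipaddress` module; the networks listed are documentation-only ranges chosen for the example, and in practice this kind of rule usually lives in the firewall or web server configuration rather than in application code.

```python
import ipaddress

# Hypothetical allowlist: an office network and one home address.
ADMIN_ALLOWLIST = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.25/32"),
]


def admin_access_allowed(client_ip, allowlist=ADMIN_ALLOWLIST):
    """True if client_ip falls inside any network on the allowlist."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in allowlist)


print(admin_access_allowed("198.51.100.17"))
print(admin_access_allowed("192.0.2.1"))
```

Anything not on the list is refused before a password is ever checked, which is why this works well alongside MFA and strong passwords rather than instead of them.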

My previous blog post on setting up a web server goes into detail on how web servers can be secured in general, and on how specifically targeted login pages such as phpMyAdmin can be secured.

Z Tech Blog

Z Tech is a technologist, senior programme director, business change lead and Agile methodology specialist. He is a former solutions architect, software engineer, infrastructure engineer and cyber security manager. He writes here in his spare time about technology, tech driven business change, how best to adopt Agile practices and cyber security.