In the Inside the Cloud Series, we looked at the individual components of the cloud in more detail. In this article, we will build on that and put all those components together and see how they combine to create a scalable, highly resilient self-healing Cloud.

Summary / TL;DR

A user connects to our DNS servers and is forwarded to either a CDN, a load balancer, or directly to a server.

If needed, the CDN will connect to the load balancer to obtain data it currently does not have in its cache.

The load balancer will forward the connection, as required, to the optimum server.

The server will be housed in a network zone. Each zone has its own subnet exclusively for its use. Zones can be connected to each other. Zones and subnets can be thought of as one and the same, and the two terms are used interchangeably in documentation.

Each zone is secured by a firewall. All servers thus sit behind a firewall.

The firewall filters traffic based on a set of rules. Each rule specifies ports, source addresses and destination addresses. Ranges of addresses and ports can be used in a rule.

The firewall rules for each zone will differ, based on the servers placed within the zone. For example, a zone with customer-facing web servers hosting a website will have firewall rules allowing incoming HTTP and HTTPS connections from anywhere in the world. However, a DB zone that is not meant to have any direct connection to the internet, and whose servers are only used by applications running on servers within the DMZ, will have firewall rules allowing connections only from the servers within the DMZ, and only on the ports used by DBs.
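To make the idea of a rule set concrete, here is a minimal sketch in Python of how a firewall might evaluate rules made of a source range, a destination range and a port range, denying anything that no rule allows. The `Rule` class, the `is_allowed` helper and the two subnets are hypothetical and chosen purely for illustration; port 3306 is the default MySQL port.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """One allow rule: a source range, a destination range and a port range."""
    source: str        # CIDR range the traffic may come from
    destination: str   # CIDR range the traffic may go to
    first_port: int    # lowest destination port allowed
    last_port: int     # highest destination port allowed

    def matches(self, src: str, dst: str, port: int) -> bool:
        return (
            ip_address(src) in ip_network(self.source)
            and ip_address(dst) in ip_network(self.destination)
            and self.first_port <= port <= self.last_port
        )


def is_allowed(rules: list[Rule], src: str, dst: str, port: int) -> bool:
    """Allow the traffic if any rule matches; otherwise deny by default."""
    return any(rule.matches(src, dst, port) for rule in rules)


# Hypothetical subnets: one for a web zone and one for a DB zone.
WEB_ZONE = "10.0.1.0/24"
DB_ZONE = "10.0.9.0/24"

web_zone_incoming = [
    Rule("0.0.0.0/0", WEB_ZONE, 80, 80),    # HTTP from anywhere
    Rule("0.0.0.0/0", WEB_ZONE, 443, 443),  # HTTPS from anywhere
]
db_zone_incoming = [
    Rule(WEB_ZONE, DB_ZONE, 3306, 3306),    # MySQL, only from the web zone
]

print(is_allowed(web_zone_incoming, "203.0.113.7", "10.0.1.10", 443))  # True
print(is_allowed(db_zone_incoming, "203.0.113.7", "10.0.9.5", 3306))   # False
print(is_allowed(db_zone_incoming, "10.0.1.10", "10.0.9.5", 3306))     # True
```

The same internet address that can reach the web zone over HTTPS is refused by the DB zone, because no DB zone rule names it as a permitted source.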

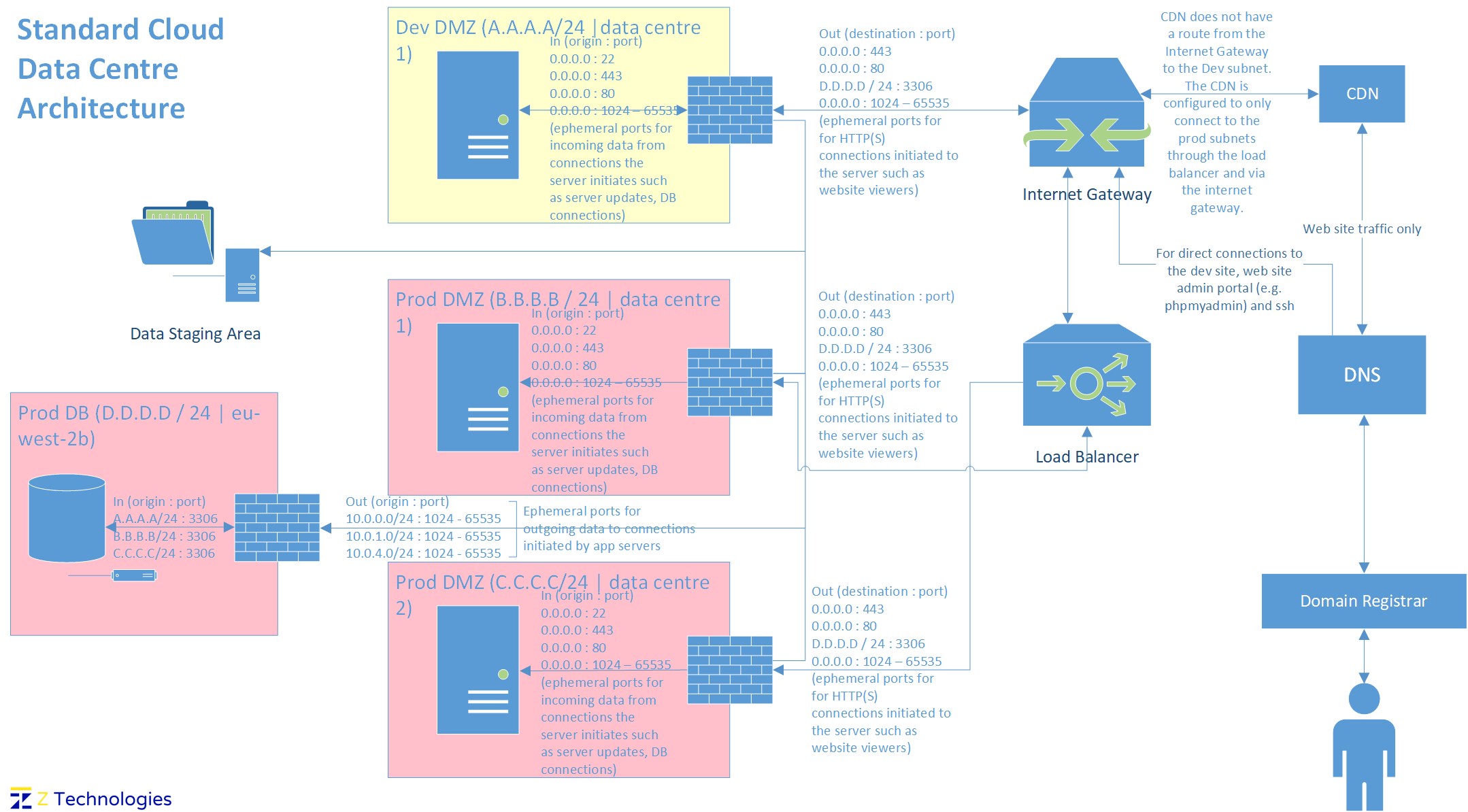

The architecture diagram below illustrates all the components, their connections and how they are used.

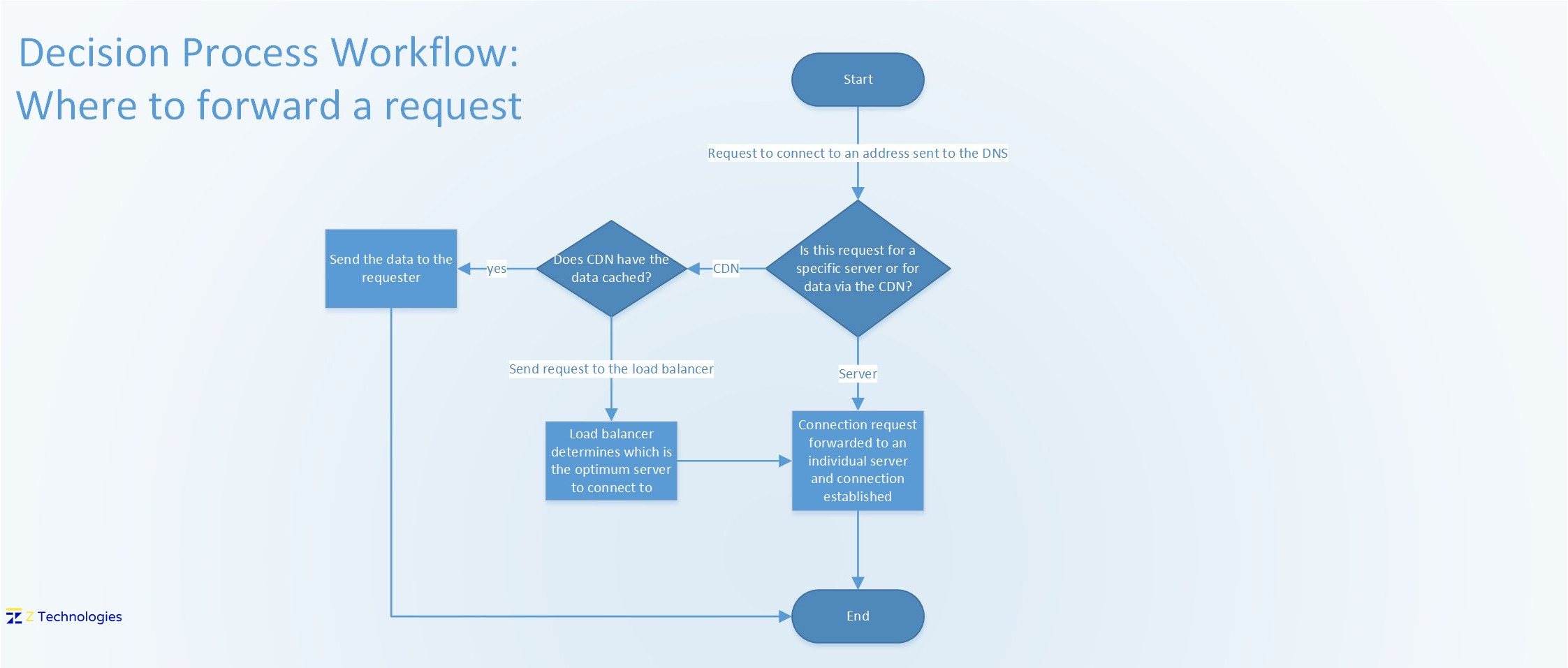

Connecting to a CDN or Server

A customer will first connect to the Cloud’s DNS servers, which will determine whether the request is for a specific server, should go directly to our load balancer, or should be passed to the CDN. This decision is based on the URL the user has entered and the data the DNS server holds in its DNS records table for that URL. The workflow below shows the path a request will take. Essentially, if the request can go to the CDN, it will be passed to the appropriate CDN server. If the CDN has the data cached, it will respond with the data. However, if the data is not present on the CDN, the CDN will have to obtain it from one of the application servers. In this situation, the CDN will connect to the load balancer, which will forward the request to one of the servers in its server pool, and that server will send the data back.

Similarly, if the request to the DNS resolves to the load balancer, the user will be forwarded directly to the load balancer, which will determine which server from its resource pool to forward the request to.
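The request path described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the hostnames, the server names, the shape of the DNS records table, and the choice of "fewest active connections" as the way the load balancer picks a server.

```python
# Hypothetical DNS records table: each hostname resolves to the CDN, the
# load balancer, or one specific server.
DNS_RECORDS = {
    "static.example.com": ("cdn", None),
    "www.example.com": ("load_balancer", None),
    "bastion.example.com": ("server", "bastion-1"),
}

# Servers in the load balancer's pool with their current connection counts.
active_connections = {"app-1": 2, "app-2": 0}

# Content the CDN already holds.
cdn_cache = {"/logo.png": "logo bytes"}


def load_balancer(path):
    """Forward to the pool server with the fewest active connections."""
    server = min(active_connections, key=active_connections.get)
    return f"{path} from {server}"


def cdn(path):
    """Serve from cache; on a miss, fetch through the load balancer and cache it."""
    if path not in cdn_cache:
        cdn_cache[path] = load_balancer(path)
    return cdn_cache[path]


def handle_request(hostname, path):
    """Follow the DNS record to the CDN, the load balancer or a server."""
    kind, server = DNS_RECORDS[hostname]
    if kind == "cdn":
        return cdn(path)
    if kind == "load_balancer":
        return load_balancer(path)
    return f"{path} from {server}"


print(handle_request("static.example.com", "/logo.png"))  # logo bytes
print(handle_request("static.example.com", "/app.js"))    # /app.js from app-2
print(handle_request("www.example.com", "/checkout"))     # /checkout from app-2
print(handle_request("bastion.example.com", "/"))         # / from bastion-1
```

The second call is the cache-miss case: the CDN does not hold `/app.js`, so it asks the load balancer, stores the answer, and can serve the next request for that path itself.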

Scaling and Self-Healing

As we learnt in our article on load balancers, it is the load balancer that introduces the feature of self-healing and scaling in a cloud.

Server clusters and nodes

Modern solutions are designed to have multiple identical servers serving user requests. In this collection of servers, called a cluster, each server (called a node) can take a user request and will process it in the same way as any other node in the cluster. For example, the same application, configured in the same way, can be installed on each node. The application on each node can then connect to the same database (DB) cluster (i.e. one cluster connects to another because the apps on every node connect to the same DB cluster). In this way, entire solutions can be broken down into components, each made up of multiple identically configured servers, in order to achieve fault tolerance and scalability.

Server Images

Furthermore, in modern solutions the servers are built from a template (called an image) that is used to create copies of the server. This means that every server is configured identically (it can connect to the same databases, has the same applications, the same configuration, etc.) because they are all built from the same template.
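As a rough illustration of why building every node from one image matters, the Python sketch below creates a small cluster from a single template and shows that each node gives the same answer to the same request. The class names, field names and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerImage:
    """Template shared by every node; all field values are hypothetical."""
    os: str
    application: str
    db_cluster: str


@dataclass(frozen=True)
class Node:
    name: str
    image: ServerImage

    def handle(self, request: str) -> str:
        # Every node runs the same application against the same DB cluster,
        # so the response does not depend on which node took the request.
        return f"{self.image.application} answered {request!r} using {self.image.db_cluster}"


def build_cluster(image: ServerImage, size: int) -> list[Node]:
    """Create `size` nodes that differ only by name."""
    return [Node(name=f"node-{i}", image=image) for i in range(1, size + 1)]


web_image = ServerImage(os="linux", application="shop-app", db_cluster="shop-db-cluster")
cluster = build_cluster(web_image, 3)

responses = {node.handle("GET /products") for node in cluster}
print(len(cluster), len(responses))  # 3 1
```

Three nodes produce one distinct response, which is what allows a load balancer to send a request to any of them.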

Scaling and Self-Healing

It is the job of the load balancer to continuously check the load on the servers and to introduce new servers, built from the server image, when the load on the existing servers rises above a threshold, i.e. to scale when traffic to the servers increases. Similarly, it is also the job of the load balancer to monitor the health of the servers and, if one is unresponsive, to introduce a replacement server into the cluster based on the server image. In this way, the infrastructure scales and self-heals: servers are added (and shut down and destroyed when no longer needed) and replaced based on the decisions the load balancer makes.
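The decision made on each check can be sketched as a small Python function. This is only an illustration under stated assumptions: the thresholds (scale up above 80% average load, scale down below 30%), the minimum of two nodes and the `NodeStatus` fields are all hypothetical, and real platforms expose many more settings.

```python
from dataclasses import dataclass


@dataclass
class NodeStatus:
    name: str
    healthy: bool   # result of the latest health check
    load: float     # fraction of capacity in use, 0.0 to 1.0


def plan(nodes, scale_up_at=0.8, scale_down_at=0.3, min_nodes=2):
    """Return (nodes to replace, change in node count) for one check cycle."""
    to_replace = [n.name for n in nodes if not n.healthy]
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        return to_replace, 0
    average_load = sum(n.load for n in healthy) / len(healthy)
    if average_load > scale_up_at:
        return to_replace, +1   # traffic is high: add a node from the image
    if average_load < scale_down_at and len(nodes) > min_nodes:
        return to_replace, -1   # traffic is low: shut one node down
    return to_replace, 0


busy = [NodeStatus("a", True, 0.9), NodeStatus("b", True, 0.85)]
failed = [NodeStatus("a", True, 0.5), NodeStatus("b", False, 0.0)]
quiet = [NodeStatus("a", True, 0.1), NodeStatus("b", True, 0.2), NodeStatus("c", True, 0.1)]

print(plan(busy))    # ([], 1)
print(plan(failed))  # (['b'], 0)
print(plan(quiet))   # ([], -1)
```

Running `plan` on every check cycle and acting on its result gives both behaviours: unhealthy nodes are swapped for fresh copies of the image, and the node count follows the traffic.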

Direct connections

It is also possible (although not shown in the workflow diagram above) for a user to connect to a server directly. This is most common in smaller organisations that do not have a CDN, or where IT admins work remotely and need to log into a server directly (perhaps a single bastion server, or a single internet-facing app server). In these cases, either the DNS will forward the user directly to the server, or the user will know the server’s IP address and use that to connect.

Different zones and data centres

In our article on IP addresses, we learnt that zones are used to house servers of a similar type together. Each zone has its own range of IP addresses, which means each zone has its own subnet (so we can think of zone = subnet), and for cyber security purposes each zone sits behind a firewall. Here, I’d also like to reinforce another very important cyber security concept: availability and redundancy.

Availability and redundancy

A highly available architecture means that there are multiple servers (multiple clusters and/or multiple servers in one cluster, but definitely multiple servers hosting the application) in multiple locations that a user request can be sent to. The benefit of this is that if one data centre housing the servers goes down (for example, due to a natural disaster), or a zone gets compromised (for example, by malware such as ransomware), there will still be servers and devices available, as they are physically located inside a different data centre in a different location, sit behind a different firewall and are on a different subnet.
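A quick way to reason about this is to ask whether the application survives the loss of any one data centre. The Python sketch below does that for a hypothetical inventory of production servers; the server names are invented for illustration.

```python
# Hypothetical inventory: which data centre each production server lives in.
SERVERS = {
    "prod-a1": "Data Centre 1",
    "prod-a2": "Data Centre 1",
    "prod-b1": "Data Centre 2",
}


def available_servers(servers, failed_data_centres):
    """Servers that can still take requests when some data centres are down."""
    return sorted(
        name for name, data_centre in servers.items()
        if data_centre not in failed_data_centres
    )


def survives_any_single_outage(servers):
    """True if at least one server remains whichever data centre fails."""
    data_centres = set(servers.values())
    return all(available_servers(servers, {dc}) for dc in data_centres)


print(available_servers(SERVERS, {"Data Centre 1"}))        # ['prod-b1']
print(survives_any_single_outage(SERVERS))                  # True
print(survives_any_single_outage({"only-1": "Data Centre 1"}))  # False
```

With servers in two locations the check passes; with everything in one data centre it fails, however many servers that data centre holds.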

Of course, some of these zones (in network-speak, subnets) are connected to each other, so there is always a risk of malware spreading across multiple zones. However, as the traffic between the subnets is regulated by a firewall, the risk is acceptable.

In our example architecture, we have two data centres (Data Centre 1 and Data Centre 2). Data Centre 1 hosts one development zone (Dev Zone), one production zone (Prod Zone) and one Prod DB Zone for DBs. Data Centre 2 hosts another Prod Zone. Because our example architecture makes the Prod Zones available to users from outside the network (i.e. the internet), we are calling these zones Prod DMZs (Demilitarized Zones).
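Since each zone maps to one subnet, the example architecture can be written down as data and queried. The Python sketch below uses the standard `ipaddress` module; the subnets are hypothetical, as the article's diagram only uses placeholders for them.

```python
from ipaddress import ip_address, ip_network
from itertools import combinations

# (data centre, zone) -> subnet. All subnets are hypothetical.
ZONES = {
    ("Data Centre 1", "Dev Zone"): ip_network("10.1.1.0/24"),
    ("Data Centre 1", "Prod DMZ"): ip_network("10.1.2.0/24"),
    ("Data Centre 1", "Prod DB Zone"): ip_network("10.1.3.0/24"),
    ("Data Centre 2", "Prod DMZ"): ip_network("10.2.2.0/24"),
}


def zone_of(address):
    """Return the (data centre, zone) whose subnet contains the address."""
    ip = ip_address(address)
    for zone, subnet in ZONES.items():
        if ip in subnet:
            return zone
    return None


# Each zone should have a subnet exclusively for its own use.
no_overlap = not any(a.overlaps(b) for a, b in combinations(ZONES.values(), 2))

print(zone_of("10.1.3.25"))  # ('Data Centre 1', 'Prod DB Zone')
print(zone_of("192.0.2.1"))  # None
print(no_overlap)            # True
```

Because no two subnets overlap, a server's IP address alone is enough to tell which zone, and therefore which firewall, it sits behind.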

Of course, depending on the application solution design, we can always have more zones in the data centres if needed. Additionally, the load balancers and CDN are also made up of servers and will be housed in one or both of the data centres. We could also have more than two data centres if needed.

Segregation

Another important cyber security concept is segregation. We should have different types of servers in different zones, so that the network and firewall of each zone can be configured differently. In our example, the solution does not require the database (DB) servers to be used directly by the users. Users connect to the application servers, and the application servers connect to the DB servers. Thus, we increase security by segregating the DB servers and giving them no direct connection to an external network (like the internet).

Firewall configuration

In our example architecture, the Prod DMZs face the outside world (they host websites, web applications and anything else that users from the internet can connect to). We also have a Prod DB Zone that hosts any DBs our application servers need (modern websites and web applications keep some of their data, such as product details, in DBs). The DB Zone is not directly internet facing, as users connect to the application servers, which in turn connect to the DB as needed.

The firewall rules for each zone are subtly different, based on the servers within them and their purpose. In this way, the firewall allows some traffic, and denies other traffic, thereby securing the Cloud.

| Rule Type | Prod DMZ | Dev DMZ | DB Zone |
| --- | --- | --- | --- |
| Global incoming SSH | Yes | Yes | No |
| Global incoming HTTP and HTTPS | Yes | Yes | No |
| Global incoming Ephemeral Ports | Yes | Yes | No |
| Global outgoing HTTP and HTTPS | Yes | Yes | No |
| Global outgoing Ephemeral Ports | Yes | Yes | No |
| Outgoing MySQL to the DB Zone | Yes | Yes | No |
| Incoming MySQL from Prod and Dev DMZs | No | No | Yes |
| Outgoing Ephemeral Ports to Prod and Dev DMZs | No | No | Yes |

Table 1: A summary of the differences between the firewalls of each Zone

As you can see in Table 1 above, the DB Zone is the most restrictive (which is for the best, especially if the DBs contain sensitive data such as customer addresses). For example, it allows no traffic in except traffic for its MySQL servers, and even then only traffic originating from the Prod and Dev DMZs. Further details on the firewall rules in our example, and the reasons for them, are as follows.

- Incoming Dev and Prod DMZs Firewall rules
  - Allow incoming SSH connections from anywhere in the world for IT admins (as we could have a global team based anywhere in the world). SSH uses port 22 (in the diagram: 0.0.0.0 : 22).
  - Allow incoming HTTP and HTTPS connections from anywhere in the world for web servers running our websites and web applications (as our website can have global visitors whose IP addresses we do not know). HTTP uses port 80 and HTTPS uses port 443 (in the diagram: 0.0.0.0 : 80 and 0.0.0.0 : 443).
  - Allow incoming data on ephemeral ports for data our website has requested from other servers. Those other servers can be located anywhere in the world (for example, for verifying debit card transactions), hence the rule with a source of 0.0.0.0 : 1024 – 65535 in the diagram.
    - One thing to note: as our servers could be hosting any type of operating system (OS), of any version, and it is the OS that picks the ephemeral port randomly from a range at runtime, we have chosen to open the common ephemeral ports for all OSs. We could further improve security by mandating a policy whereby IT must only use certain OS versions, and then restricting the range of ephemeral ports to match. Furthermore, we could also enact a policy where incoming data on ephemeral ports must come from known sources (however, this might not always be practical, as it would mean the services we connect to must tell us their servers’ IP addresses and those IP addresses cannot change).
    - Another thing to note is that the web application needs to connect to its DB and receive data. For this, we know the subnet address range of the DB Zone. However, we don’t have a rule specifically for this, because the incoming ephemeral port rule with any source IP address covers this traffic. For zones that don’t have web servers, and hence wouldn’t have this rule, we would add an incoming rule covering the DB zone and ephemeral ports (D.D.D.D / 24 : 1024 – 65535).
  - Deny all other incoming connections. For security purposes, any other type of connection on any port is not allowed and will be denied, as it falls outside the purpose of the servers in the zones.
- Outgoing Dev and Prod DMZs Firewall rules
  - Allow outgoing connections to anywhere in the world over HTTP and HTTPS to connect to servers our web applications need data from (for example, payment websites). HTTP uses port 80 and HTTPS uses port 443 (in the diagram: 0.0.0.0 : 80 and 0.0.0.0 : 443).
  - Allow outgoing connections to the DB zone for MySQL DBs, which use port 3306 (in the diagram: D.D.D.D / 24 : 3306).
  - Allow outgoing data on ephemeral ports for users that have requested data. The user’s OS determines the ephemeral port and, as our website’s users can be located anywhere in the world and use a device running any type of OS, we have set up a rule for the entire ephemeral port range (in the diagram: 0.0.0.0 : 1024 – 65535).
  - Deny all other types of outgoing data for security purposes. This is mainly done to ensure no malicious outgoing data is possible.
- Incoming DB Zone Firewall rules
  - In our example, because the DBs used are only MySQL ones, the DB Zone hosts nothing except DB servers, and the DB servers are not meant to be used by anything except web servers, the DB Zone firewall only allows incoming data from the Dev or Prod DMZs for MySQL (in the diagram: A.A.A.A / 24 : 3306, B.B.B.B / 24 : 3306, C.C.C.C / 24 : 3306).
  - All other data and types of connections are denied.
- Outgoing DB Zone Firewall rules
  - Allow outgoing data on ephemeral ports to servers in the Dev and Prod DMZs (in the diagram: A.A.A.A / 24 : 1024 - 65535, B.B.B.B / 24 : 1024 - 65535, C.C.C.C / 24 : 1024 - 65535).
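To see why the ephemeral port rules are needed, it helps to trace one MySQL query and its reply through all four rule sets. The Python sketch below does this under several assumptions: the firewalls are treated as stateless (each direction is checked on its own), only one DMZ subnet is modelled, and the subnets are hypothetical stand-ins for the diagram's A.A.A.A and D.D.D.D placeholders.

```python
from ipaddress import ip_address, ip_network

# Hypothetical stand-ins for the diagram's placeholders.
DMZ = "10.1.2.0/24"      # plays the role of A.A.A.A / 24
DB_ZONE = "10.1.3.0/24"  # plays the role of D.D.D.D / 24
ANYWHERE = "0.0.0.0/0"
EPHEMERAL = (1024, 65535)

# Each rule: (peer network, (first destination port, last destination port)).
RULES = {
    ("dmz", "out"): [(ANYWHERE, (80, 80)), (ANYWHERE, (443, 443)),
                     (DB_ZONE, (3306, 3306)), (ANYWHERE, EPHEMERAL)],
    ("dmz", "in"): [(ANYWHERE, (22, 22)), (ANYWHERE, (80, 80)),
                    (ANYWHERE, (443, 443)), (ANYWHERE, EPHEMERAL)],
    ("db", "in"): [(DMZ, (3306, 3306))],
    ("db", "out"): [(DMZ, EPHEMERAL)],
}


def passes(zone, direction, peer, dest_port):
    """True if any rule for this zone and direction matches; otherwise deny."""
    return any(
        ip_address(peer) in ip_network(network) and low <= dest_port <= high
        for network, (low, high) in RULES[(zone, direction)]
    )


def round_trip(web, db, client_port):
    """Check a MySQL query and its reply against all four rule sets."""
    request = passes("dmz", "out", db, 3306) and passes("db", "in", web, 3306)
    reply = (passes("db", "out", web, client_port)
             and passes("dmz", "in", db, client_port))
    return request and reply


print(round_trip("10.1.2.10", "10.1.3.5", 50000))  # True
print(passes("db", "in", "203.0.113.7", 3306))     # False
print(passes("db", "out", "10.1.2.10", 443))       # False
```

The query leaves the DMZ and enters the DB Zone on port 3306, while the reply travels back to whatever ephemeral port the web server's OS chose (50000 here), which is why both zones need a rule covering the ephemeral range.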

Conclusion

With these components in place, and the network configured, data will flow from a user to a CDN to a load balancer to an application server to a DB and then back to the user (phew!!!). This has been a generic example to show what components make up a cloud and how those components fit together. The principles covered in this blog post are applicable to all types of Clouds and data centres.

If you would like to put these concepts to practical use, then please check out my tutorial on how to build a web server.

Z Tech Blog

Z Tech is a technologist, senior programme director, business change lead and Agile methodology specialist. He is a former solutions architect, software engineer, infrastructure engineer and cyber security manager. He writes here in his spare time about technology, tech driven business change, how best to adopt Agile practices and cyber security.

Hey. Thanks for publishing this article. It helped, and the entire cloud series, helped in my understanding of what the cloud means and how it all fits together.

I’d be interested in finding out some more about the specifics. Like how things work with AWS. And also how web servers are built and put together and how that fits.

Thanks.

Hey, Tony. Thanks for leaving feedback – it’s greatly appreciated.

Funny you should mention building a web server, I’m actually working on an article just for that and it will hopefully be published soon!

Thanks again for the feedback,

Z Tech.